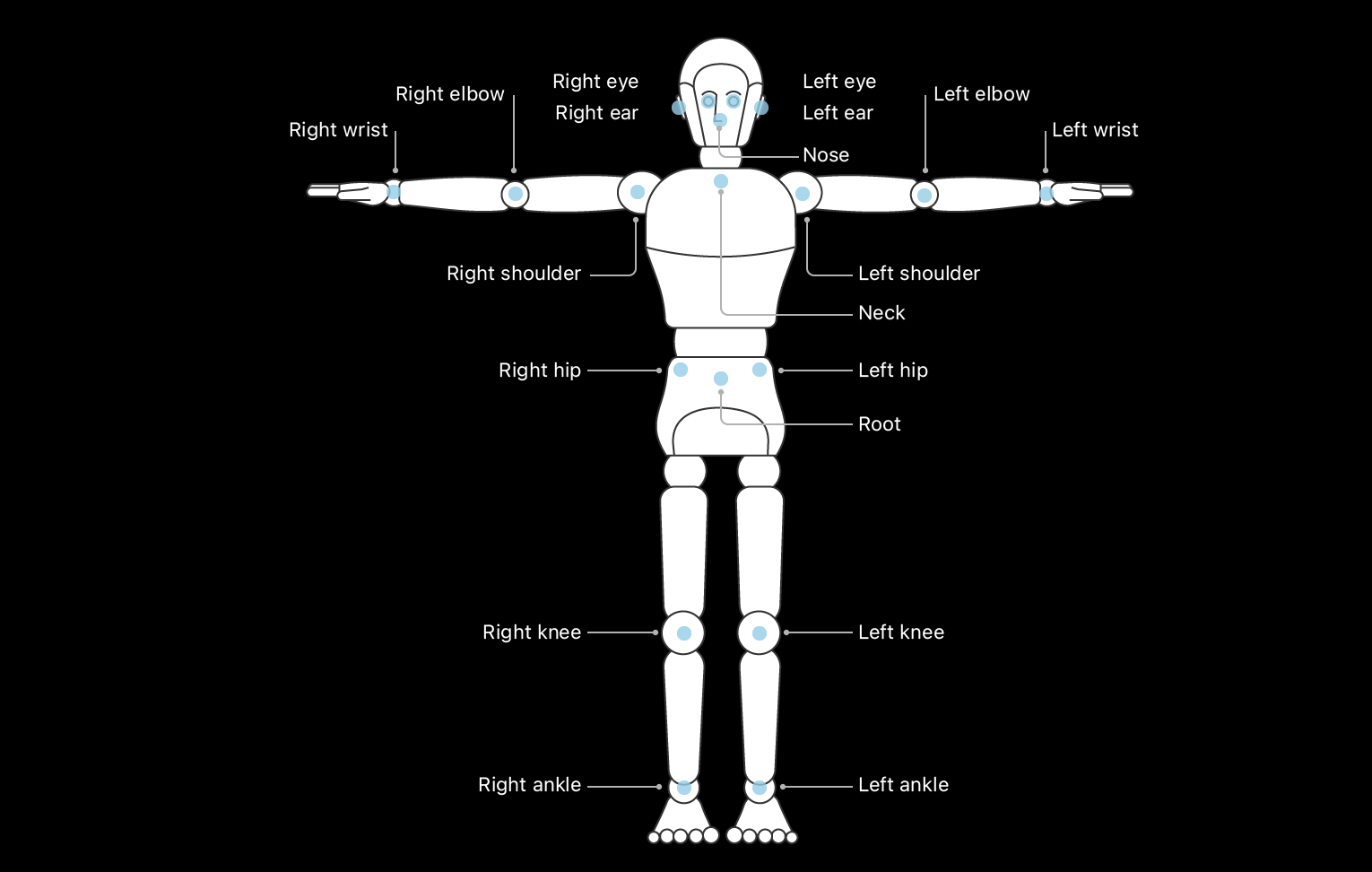

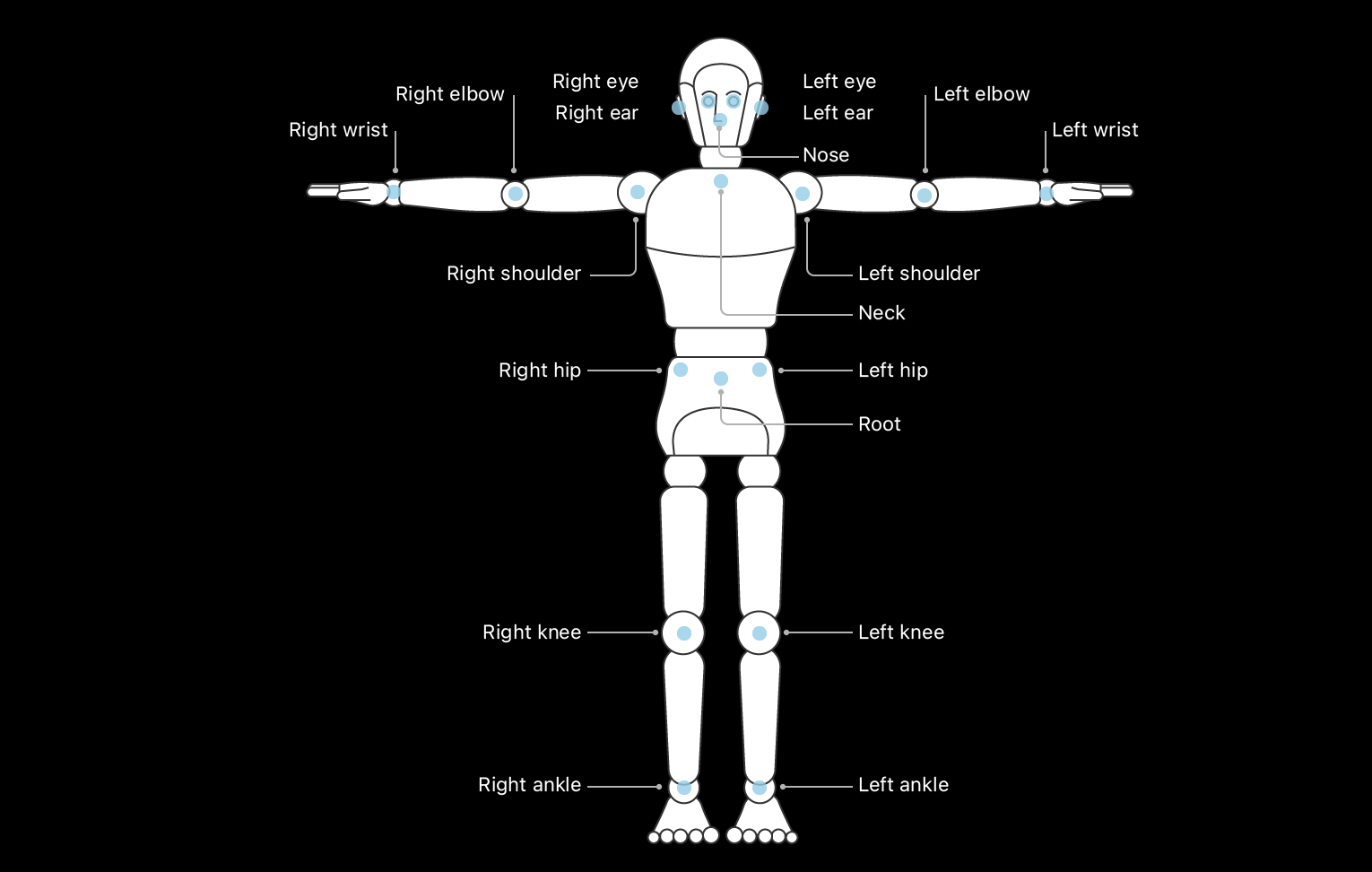

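Starting with iOS 14 and macOS 11, Vision gained a powerful new capability: human body pose detection. It can recognize 19 key points (joints) on the human body, as shown in the figures above.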
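To make the "19 key points" concrete, here is a sketch that lists them as `VNHumanBodyPoseObservation.JointName` constants. The array name `allBodyJoints` and the grouping comments are illustrative only, not part of Vision's API.

```swift
import Vision

// The 19 body joints that VNDetectHumanBodyPoseRequest can report (iOS 14+ / macOS 11+).
// `allBodyJoints` is a hypothetical name used only for this illustration.
let allBodyJoints: [VNHumanBodyPoseObservation.JointName] = [
    // Head (5)
    .nose, .leftEye, .rightEye, .leftEar, .rightEar,
    // Neck and arms (7)
    .neck, .leftShoulder, .rightShoulder,
    .leftElbow, .rightElbow, .leftWrist, .rightWrist,
    // Hips and legs (7)
    .root, .leftHip, .rightHip,
    .leftKnee, .rightKnee, .leftAnkle, .rightAnkle,
]
```

At runtime, an individual observation's `availableJointNames` property reports which joints it actually contains.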

Implementation

1. Make a request

The Vision framework provides body pose detection through VNDetectHumanBodyPoseRequest. The following code shows how to detect body key points in a CGImage.


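```swift
import UIKit
import Vision

func detectBodyPose() {
    // Load the image to analyze and get its CGImage representation.
    guard let cgImage = UIImage(named: "bodypose")?.cgImage else { return }

    // The request handler runs Vision requests against this single image.
    let requestHandler = VNImageRequestHandler(cgImage: cgImage)

    // bodyPoseHandler is called with the results when the request finishes.
    let request = VNDetectHumanBodyPoseRequest(completionHandler: bodyPoseHandler)

    do {
        // perform(_:) runs synchronously on the calling thread.
        try requestHandler.perform([request])
    } catch {
        print("Unable to perform the request: \(error).")
    }
}
```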

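Because `perform(_:)` is synchronous, the request's `results` are already populated when it returns, so a completion handler is optional. The sketch below assumes you want a plain function that returns the observations; `detectBodyPoses(in:)` is a hypothetical helper name, and the dispatch-queue usage in the trailing comment is one way to keep the main thread free.

```swift
import Vision

/// Hypothetical helper: runs VNDetectHumanBodyPoseRequest on `cgImage` and
/// returns the observations directly instead of using a completion handler.
/// perform(_:) blocks, so call this from a background queue.
func detectBodyPoses(in cgImage: CGImage) throws -> [VNHumanBodyPoseObservation] {
    let request = VNDetectHumanBodyPoseRequest()
    let handler = VNImageRequestHandler(cgImage: cgImage, options: [:])
    try handler.perform([request])
    // Results are available once perform(_:) returns; an empty array means no person was found.
    return (request.results as? [VNHumanBodyPoseObservation]) ?? []
}

// Usage sketch:
// DispatchQueue.global(qos: .userInitiated).async {
//     let observations = (try? detectBodyPoses(in: cgImage)) ?? []
//     DispatchQueue.main.async { /* update the UI with observations */ }
// }
```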

2. Handle the results

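When the request finishes, Vision calls the completion closure, which gives you access to the detection results and any error. If body key points were detected, they are returned as an array of VNHumanBodyPoseObservation, one per detected person. Each VNHumanBodyPoseObservation contains the recognized key points and a confidence score; the higher the confidence, the more reliable the detection.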


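```swift
import Vision

func bodyPoseHandler(request: VNRequest, error: Error?) {
    // Report a failure instead of silently ignoring it.
    if let error = error {
        print("Body pose request failed: \(error).")
        return
    }
    // Each observation corresponds to one detected person.
    guard let observations =
            request.results as? [VNHumanBodyPoseObservation] else {
        return
    }
    observations.forEach { processObservation($0) }
}
```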

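Besides the observation-level score, every recognized point carries its own `confidence`. The sketch below collects all joints of one person (using the `.all` joint group rather than a single group) and drops low-confidence points. The function name `confidentJoints` and the 0.3 default threshold are assumptions for illustration; tune the threshold for your images.

```swift
import Vision

/// Hypothetical helper: returns the normalized location of every joint whose
/// confidence exceeds `minimumConfidence` (an assumed, tunable threshold).
func confidentJoints(in observation: VNHumanBodyPoseObservation,
                     minimumConfidence: Float = 0.3)
    -> [VNHumanBodyPoseObservation.JointName: CGPoint] {
    // .all requests every joint group at once instead of a single group such as .torso.
    guard let points = try? observation.recognizedPoints(.all) else { return [:] }
    var result: [VNHumanBodyPoseObservation.JointName: CGPoint] = [:]
    for (joint, point) in points where point.confidence > minimumConfidence {
        // location is still normalized to [0, 1] with a lower-left origin.
        result[joint] = point.location
    }
    return result
}
```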

3. Retrieve the key points

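Use VNHumanBodyPoseObservation.JointName to look up the coordinates of a specific key point. Note that the points returned by recognizedPoints(_:) are normalized to the range [0, 1], with the origin at the lower-left corner of the image, so they must be converted before use. The code below fetches the torso joint group (.torso) and scales each point to image coordinates with VNImagePointForNormalizedPoint.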


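```swift
import Vision

func processObservation(_ observation: VNHumanBodyPoseObservation) {
    // Retrieve all recognized points in the torso joint group.
    guard let recognizedPoints =
            try? observation.recognizedPoints(.torso) else { return }

    // The torso joints to extract.
    let torsoJointNames: [VNHumanBodyPoseObservation.JointName] = [
        .neck,
        .rightShoulder,
        .rightHip,
        .root,
        .leftHip,
        .leftShoulder
    ]

    // Keep only detected joints and scale them from normalized [0, 1] values
    // to pixel coordinates. The result still has a lower-left origin.
    // `imageSize` is assumed to be the analyzed image's size, defined elsewhere
    // (for example, as a property of the enclosing type).
    let imagePoints: [CGPoint] = torsoJointNames.compactMap {
        guard let point = recognizedPoints[$0], point.confidence > 0 else { return nil }
        return VNImagePointForNormalizedPoint(point.location,
                                              Int(imageSize.width),
                                              Int(imageSize.height))
    }

    // `draw(points:)` is assumed to be an app-defined drawing helper.
    draw(points: imagePoints)
}
```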

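VNImagePointForNormalizedPoint only scales the point; the origin stays at the lower-left. UIKit views use a top-left origin, so the y axis also has to be flipped. Here is a minimal sketch of that conversion, assuming the target space has the same size as the analyzed image; `convertToTopLeftPixels` is a hypothetical name.

```swift
import Foundation

/// Hypothetical helper: converts a Vision normalized point (range [0, 1],
/// origin at the lower-left) into pixel coordinates with a top-left origin,
/// as used by UIKit.
func convertToTopLeftPixels(_ normalized: CGPoint, imageSize: CGSize) -> CGPoint {
    CGPoint(x: normalized.x * imageSize.width,
            // Flip the y axis: Vision's y grows upward, UIKit's grows downward.
            y: (1 - normalized.y) * imageSize.height)
}

let p = convertToTopLeftPixels(CGPoint(x: 0.25, y: 0.75),
                               imageSize: CGSize(width: 400, height: 200))
// p is (100.0, 50.0)
```

If the image is displayed in a view of a different size (for example, with aspect-fit scaling), an additional scale and offset from image space to view space is still needed.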

Going further

Besides Vision's VNDetectHumanBodyPoseRequest, human body pose detection can also be implemented with Core ML. Apple's official sample, Detecting Human Body Poses in an Image, runs on iOS 13 and later.